Vendors are Promising:

80%+ Savings on Object Storage Compared To Amazon S3

We’re asking, what’s the catch?

Our writing staff is made up of cloud cost experts and senior architects. Our work has been featured in AWS’s Enterprise Strategy Blog, Linux Journal, Hacker Noon, and more.

There are no affiliate links. Cloud BS never accepts payments from vendors to publish research or show more favorable opinions.

To build this report, we:

Don’t ask me why, but I turned off my ad blocker the other day. I don’t know how they do it, but Wasabi took up 8 of the 16 display ad slots on the page.

They were adamant: “We’re 1/5 the price of Amazon S3.”

While I’m probably on some retargeting list, the point remains:

Object storage providers are hungry to take Amazon S3’s market share.

Our take is that they will win against Amazon S3 in many use cases. Watch out, though! Unless you know what you’re getting into, you’ll get burned 🔥.

The specialized storage providers we’re focusing on are Wasabi, Backblaze B2 and Storj DCS. However, these points will apply to Digital Ocean Spaces, Linode Object Storage and Vultr Object Storage as well.

Here’s what we’ll tell you:

Integration with other services is the most significant advantage of the big cloud storage providers like AWS, Azure, or Google Cloud Platform.

Are you doing anything other than storing and serving files? Are you analyzing your unstructured data with Amazon Athena or Google BigQuery? Low-cost providers might end up being more expensive.

To use a non-native storage service, you’ll have to pay for the additional egress and engineering time it takes to integrate.

In some cases, saving on the total cost of storage makes it worth it. In others, you’ll end up losing money.
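To make that trade-off concrete, here is a minimal Python sketch of a monthly cost comparison. It rests on our own simplifying assumptions, not on any vendor’s formula: same-region reads from a native bucket are free, pulling data from an external provider back into the big cloud costs that provider’s egress fee, and one-time integration work is spread evenly over a chosen number of months. The class and function names are hypothetical, and every price in the example is a placeholder to replace with current price sheets (it also ignores S3’s tiered pricing).

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    stored_tb: float        # average TB kept in storage each month
    pulled_tb: float        # TB read back into the big cloud each month (e.g. for Athena/BigQuery)
    migration_cost: float   # one-time engineering cost to integrate, in dollars
    amortize_months: int    # months over which the migration cost is spread


def native_monthly(s: Scenario, price_per_tb: float) -> float:
    # Assumption: same-region reads from a native bucket incur no transfer fee.
    return s.stored_tb * price_per_tb


def external_monthly(s: Scenario, price_per_tb: float, egress_per_tb: float) -> float:
    storage = s.stored_tb * price_per_tb
    egress = s.pulled_tb * egress_per_tb  # external provider's fee to send data back
    engineering = s.migration_cost / s.amortize_months
    return storage + egress + engineering


if __name__ == "__main__":
    # Placeholder prices ($/TB/month stored, $/TB transferred); substitute real ones.
    NATIVE, EXTERNAL, EXTERNAL_EGRESS = 23.0, 6.0, 10.0
    for stored in (50, 500):
        s = Scenario(stored_tb=stored, pulled_tb=20, migration_cost=30_000, amortize_months=12)
        print(f"{stored} TB: native ${native_monthly(s, NATIVE):,.0f}, "
              f"external ${external_monthly(s, EXTERNAL, EXTERNAL_EGRESS):,.0f}")
```

With these placeholder numbers, 50 TB stored comes out at $1,150/month natively versus $3,000/month externally, while 500 TB flips the result: $11,500 natively versus $5,700 externally. The cheaper per-TB price only wins once storage volume outweighs the egress and engineering overhead.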

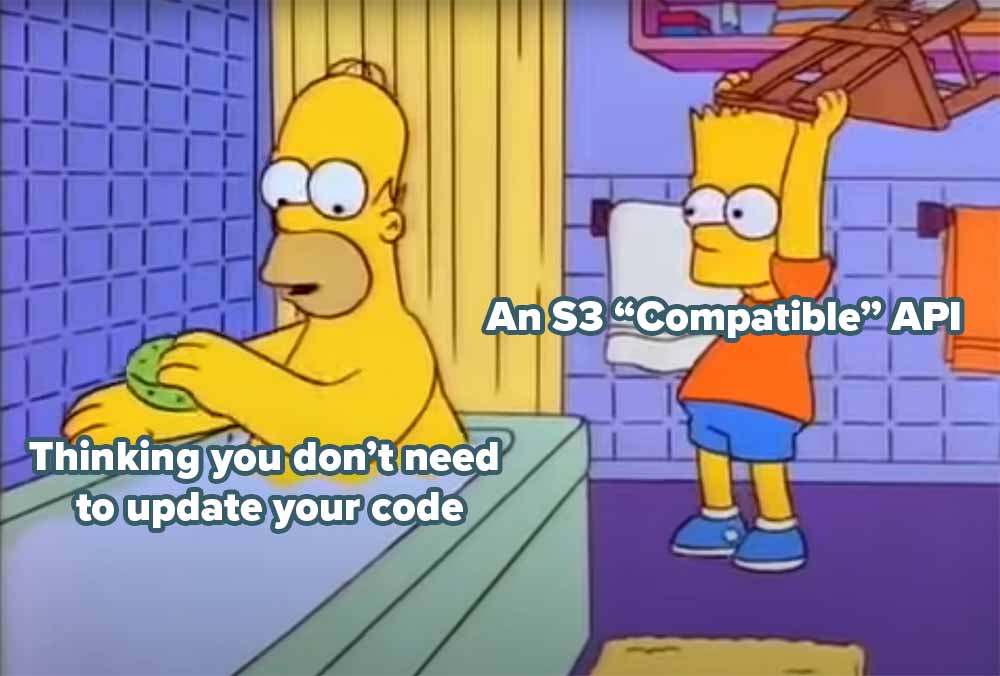

Want to give your customers pre-signed URLs so they can upload straight to your storage safely? Unfortunately, you’re out of luck with Wasabi or Backblaze B2. And in general, no matter how “100%” compatible a vendor’s S3 API is, you will always have to rewrite some code to make things work.

For example, looking at just one aspect of the S3 API: Amazon S3 exposes plenty of multipart upload settings, and we find that other vendors rarely implement them consistently.

To be fair, most vendors have done a decent job implementing the core components of the S3 API, but it’s imperative to know whether they cover the non-core parts you need. Implementations of the S3 API differ significantly in how closely they comply with Amazon’s. For example, some vendors expose dedicated endpoints that accept S3-style requests and translate them into native requests for their platform. Others have no dedicated endpoint, so they try to make their native endpoint look as much like Amazon S3’s API as possible.

Generally, the most significant differences between Amazon’s native API and other implementations revolve around concurrency and data transfer. Several specialized S3 features would be hard to run without the AWS backbone (e.g., where you pull data from or how it transits). For example, S3 Transfer Acceleration won’t work with another provider, because it routes data through AWS’s edge locations and across AWS’s own network.

If an engineer enabled Transfer Acceleration in your storage code and now has to strip it out, migrating to any of these specialized providers is no longer a code-free change, which in turn affects your TCO. If you’re lucky, your engineers are in the habit of wrapping storage calls so the code stays provider-agnostic.
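A minimal sketch of that wrapping habit in Python: application code depends on a small, hypothetical `ObjectStore` interface instead of a vendor SDK, so switching providers means writing one new backend rather than touching every call site. The in-memory backend exists only so the example runs on its own. A real backend might wrap boto3’s `boto3.client("s3", endpoint_url=...)`, which lets the same SDK talk to an S3-compatible vendor; AWS-only options such as Transfer Acceleration would then live inside the AWS backend and nowhere else.

```python
from typing import Protocol


class ObjectStore(Protocol):
    """The only storage operations application code is allowed to call (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend for tests; a real one would wrap an S3-compatible client."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def save_report(store: ObjectStore, name: str, body: str) -> str:
    # Application code sees only the interface, never a vendor SDK.
    key = f"reports/{name}.txt"
    store.put(key, body.encode("utf-8"))
    return key


store = InMemoryStore()
key = save_report(store, "q1", "hello")
print(key, store.get(key))
```

The interface is deliberately narrow: it exposes only operations every provider supports, which is what helps keep a later migration close to code-free.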

There’s a bucket of broad-based but lower-cost providers that come in at about 50-90% of the cost of Amazon S3 ($21-23/TB/mo) for standard storage. These vendors are:

On the other hand, the specialized (i.e., storage-only) providers are much cheaper, sometimes under 30% of the cost of Amazon S3 for standard storage, like:

The specialized providers tend to have lower data transfer fees. In addition, some providers, such as Digital Ocean, Cloudflare R2, and Linode, bundle data transfer into their standard storage pricing or offer it for free.
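As a quick sanity check on the headline numbers, this Python snippet compares storage list prices only, using the Amazon S3 range quoted above and the Wasabi price quoted later in this article ($5.99/TB/mo). It deliberately ignores egress, API requests, and minimum-retention charges, so it is a rough check rather than a TCO model; the function name is hypothetical.

```python
S3_STANDARD_PER_TB = (21.0, 23.0)  # $/TB/month, range quoted in this article
WASABI_PER_TB = 5.99               # $/TB/month, Wasabi list price quoted in this article


def share_of_s3(price_per_tb: float, s3_price_per_tb: float) -> float:
    """Fraction of the S3 storage price that the other provider charges."""
    return price_per_tb / s3_price_per_tb


for s3_price in S3_STANDARD_PER_TB:
    share = share_of_s3(WASABI_PER_TB, s3_price)
    print(f"vs ${s3_price:.0f}/TB: {share:.0%} of the S3 price, a {1 - share:.0%} saving")
```

On storage alone, that works out to roughly 26-29% of the S3 price, or a 71-74% saving. Reaching an “80%+” or “1/5 the price” figure presumably depends on also counting the egress and API fees Wasabi waives.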

While the pricing of the storage-only providers is attractive, they come with “gotchas” of their own:

Amazon S3 and the storage solutions from the other broad-based Big 3 providers are the go-to choice for most enterprise use cases because of the low latency you can generally achieve. You can expect lower latency if your compute instances are in the same data center as your object storage service, or on the same backbone.

Need to meet a compliance requirement, like HIPAA? Or standard requirements for access management (i.e., IAM)? Unfortunately, several low-cost object storage providers don’t score well on compliance, and others lack the management features you and your compliance and security officers are undoubtedly used to, including:

In addition, some of these low-cost providers lack features you might not think you need now but that could come in very handy later:

The “gotchas” shared by both Wasabi and Backblaze B2 fall into four buckets:

[Note] Not necessarily a “gotcha,” but vendors like Backblaze don’t always meet the specific compliance requirements of some industries.

The Big 3 have far more regions and zones to replicate your data across, and more than one of them offers tiers that replicate your data across zones and regions several times over by default. Smaller vendors can replicate your data as well, but they generally do so within a single zone (i.e., a single data center).

If your business case requires your data to survive a catastrophic event that affects the single data center it’s stored in, Backblaze B2 and Wasabi might not be a good fit. They only sometimes offer multiple zones within a region.

Even on object storage alone, Backblaze and Wasabi offer fewer features than the Big 3 alternatives. They are also single-purpose vendors, which can add development complexity for your team when building full-fledged applications that span many kinds of cloud services.

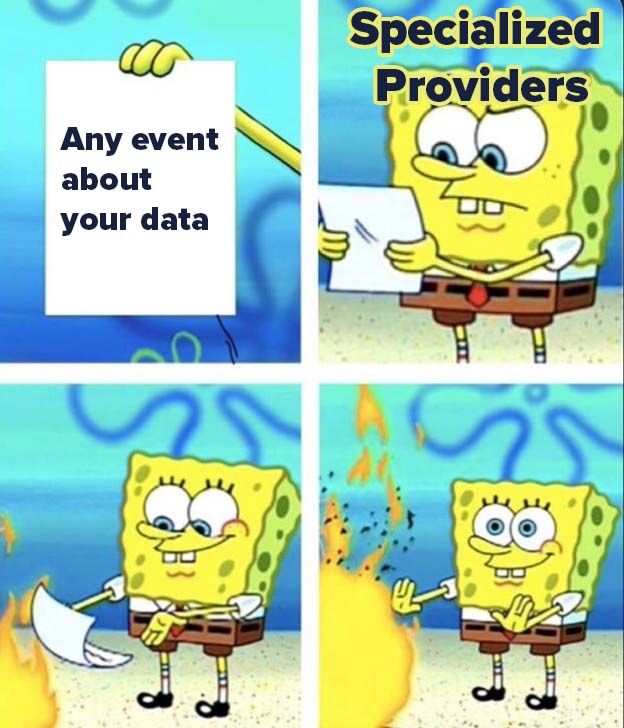

For example, let’s say you have to connect your storage service seamlessly to your data science or ML pipeline. Is that going to work? The worst of the feature limitations we encountered was the lack of any serverless or eventing features.

While it’s a feature of Wasabi and Backblaze B2 to have simple and/or single-tier pricing, this can also be a bug depending on the use case. Here are some examples:

If money is no object and your team is willing to deal with some complexity, Amazon S3, Google Cloud Storage, and Azure Blob Storage are usually better choices because of their flexibility.

Wasabi is an excellent choice for “hot archive” storage wherein you:

On the surface, Wasabi looks appealing, with a simple pricing structure ($5.99/TB/mo) that includes free egress and free API operations. How can Wasabi afford this, you ask? Their business model relies on users keeping their data stored (and unchanged) for a while and not consuming more than their fair share of resources, which Wasabi regulates through a handful of fair use policies.

This kind of setup makes Wasabi nearly perfect for use cases like storing body-cam footage. Body cams produce lots of large video files; you need quick access to some of them, but you rarely (if ever) change or delete them.

Now, if your usage strays from what they expect on any of these dimensions, Wasabi will make you pay or show you the door, as they “reserve the right to limit or suspend your service” based on your usage patterns.

Are you transferring lots of data relative to your storage volume? You have to keep your monthly egress at or below your volume of active storage (e.g., if you have 1 TB of storage on your account, Wasabi wants you to egress/transfer/download no more than 1 TB a month).
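A minimal Python sketch of that egress rule as this article describes it: monthly egress should not exceed active storage. The `Month` record and the function name are hypothetical, and Wasabi’s own enforcement may weigh other factors, so treat this as a rough self-check rather than Wasabi’s actual algorithm.

```python
from typing import NamedTuple


class Month(NamedTuple):
    label: str
    active_storage_tb: float  # average TB stored that month
    egress_tb: float          # TB downloaded/transferred out that month


def months_over_egress_policy(history: list[Month]) -> list[str]:
    """Labels of months where egress exceeded active storage (the rule described above)."""
    return [m.label for m in history if m.egress_tb > m.active_storage_tb]


history = [
    Month("Jan", active_storage_tb=1.0, egress_tb=0.4),
    Month("Feb", active_storage_tb=1.0, egress_tb=1.0),  # exactly at the limit: allowed
    Month("Mar", active_storage_tb=1.2, egress_tb=3.5),  # 3.5 TB out vs 1.2 TB stored
]
print(months_over_egress_policy(history))  # ['Mar']
```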

Are you deleting or replacing files? Each uploaded object has a minimum storage period of 90 days. You will pay for 90 days of storage, no matter what. This policy even applies to “overwritten” files.

Example: Let’s say you upload headshot.jpg, make a change, and upload headshot.jpg again. Wasabi will charge you for both files for a minimum of 90 days.
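To see how quickly the minimum storage period adds up, here is a small Python sketch of the rule described above: each uploaded version, including one that is later overwritten, is billed for the greater of the days it was actually stored or 90 days. The function name is hypothetical, and it counts billed days only, leaving object size and price out.

```python
MIN_STORAGE_DAYS = 90  # Wasabi's minimum storage period, as described in this article


def billed_days(days_stored_per_version: list[int], min_days: int = MIN_STORAGE_DAYS) -> int:
    """Total days billed across every uploaded version of an object."""
    return sum(max(days, min_days) for days in days_stored_per_version)


# headshot.jpg: first version overwritten after 3 days,
# second version deleted after 30 days.
versions = [3, 30]
print(sum(versions), "days actually stored")  # 33
print(billed_days(versions), "days billed")   # 180
```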

Are you running lots of API operations relative to your storage volume? Then, Wasabi “dynamically controls” the allocation you have to run various operations based on your storage volume.

Bottom Line: If you need hot archival storage, Wasabi is an excellent platform. If your usage doesn’t fit the profile above, Wasabi can cause many headaches.

The industry standard set by Amazon S3 is multi-AZ (availability zone) redundancy. After saving your files, they get backed up across multiple physical data centers. This is not the case with Backblaze B2.

When you use a Big 3 provider, like Azure Blob Storage or Amazon S3, you’re often paying for reliable storage that enterprises with a low tolerance for risk can bet the farm on. Azure offers a wide range of replication options (which have a considerable impact on the reliability of your storage), including LRS (Locally Redundant Storage) if you want to save some cost. Amazon S3 Standard, by default, replicates your data across at least three separate Availability Zones. To get the equivalent on Backblaze B2, well… you have to pay for it, because their bargain pricing covers a single zone only. If, like many other organizations, you need multi-AZ redundancy, it costs about twice as much.

Storj DCS is the least expensive option among all the cloud object storage providers listed here. In theory, it also has the highest level of data redundancy, because it runs a tokenized economy that pays a decentralized network of node operators around the world to store your data. And although your data is, in theory, hosted everywhere by parties unknown to you, no one other than you can read it: end-to-end encryption is on by default.

In short, here’s how it works. Storj:

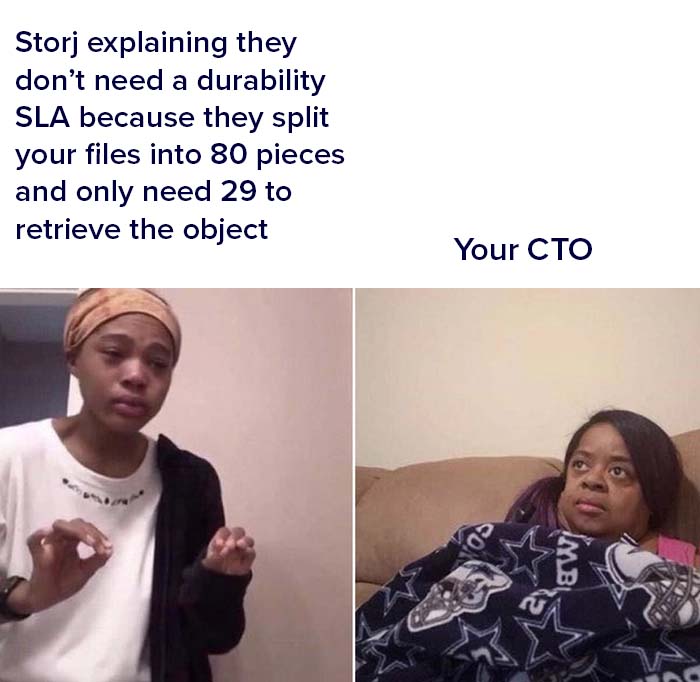

Their tech solves issues like “what if a node goes offline” and more, but we’re left with two main problems possibly inherent to the technology.

**There is no data redundancy SLA for Storj DCS.** So how do you explain that to your CTO/CIO/VP/SRE? To their credit, Storj DCS has enterprise-grade SLAs for most other aspects of the storage service, and it stands to reason that data redundancy should be pretty good thanks to its sprawling global network. However, for some companies, a data redundancy SLA may be a challenging requirement to work around.

Unfortunately, Storj DCS’s network and economics do not work if you store lots of small files. (IoT use cases with billions of 4K files, we’re looking at you!) Storj DCS’s smallest billing increment for a file is 64MB, and you’ll be charged $0.0000088 for each file under that threshold or each incremental 64MB part of a larger file.
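The arithmetic behind that warning, as a Python sketch: every object is billed as at least one segment, and larger objects are billed per 64MB part. We assume “64MB” means 64 MiB (67,108,864 bytes) and that the $0.0000088 fee applies per segment per billing month; check Storj’s current pricing before relying on either. The function names are hypothetical.

```python
import math

SEGMENT_BYTES = 64 * 1024 * 1024  # assumed: 64 MiB per billable segment
FEE_PER_SEGMENT = 0.0000088       # $ per segment, as quoted in this article


def segments(object_size_bytes: int) -> int:
    # Every object counts as at least one segment, even a 4 KB one.
    return max(1, math.ceil(object_size_bytes / SEGMENT_BYTES))


def segment_fee(object_count: int, object_size_bytes: int) -> float:
    """Segment fee for object_count objects that are all object_size_bytes long."""
    return object_count * segments(object_size_bytes) * FEE_PER_SEGMENT


# A billion 4 KB IoT readings (about 4 TB in total)...
print(f"${segment_fee(1_000_000_000, 4_000):,.2f}")      # $8,800.00
# ...versus the same ~4 TB packed into a single large archive object.
print(f"${segment_fee(1, 4_000 * 1_000_000_000):,.2f}")  # $0.52
```

Same data volume, roughly four orders of magnitude apart in segment fees. Batching small files into larger objects is the usual workaround, if your access pattern allows it.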

Cloudflare R2 has caught the eyes of the industry by claiming they’ll offer free egress.

The Cloud BS team has already been talking to the Cloudflare team about running tests as soon as possible. If you want to get that content before it’s public, sign up for our newsletter.
